Xianghui Yang is a Senior Research Scientist at Tencent. He earned his Ph.D. from the School of Electrical & Information Engineering at The University of Sydney, where he was affiliated with the USYD-Vision Lab under the supervision of Prof. Luping Zhou, Prof. Guosheng Lin, and Prof. Wanli Ouyang. Prior to his doctoral studies, he received his B.Sc. in Physics from the School of Physics, Nanjing University in 2019. His research focuses on Foundation Models, Large-Scale AI, Multimodal Learning, and 3D Vision.

Experience

Featured Publications

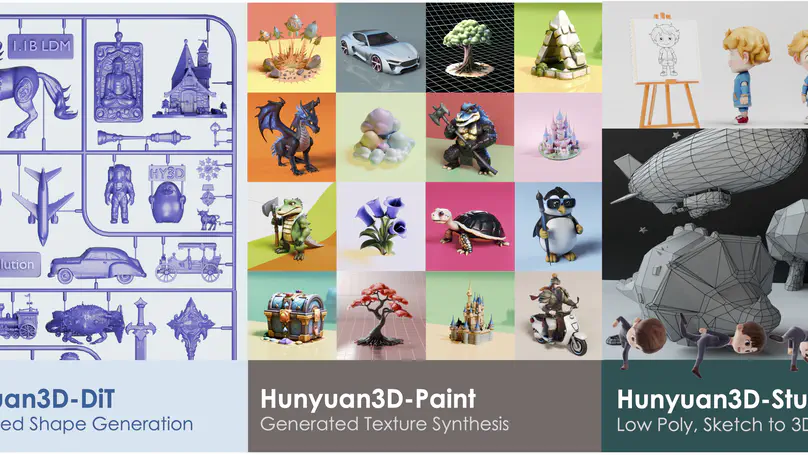

Hunyuan3D 2.0 is an advanced large-scale 3D synthesis system designed to generate high-resolution textured 3D assets. It consists of two core components: Hunyuan3D-DiT, a scalable flow-based diffusion transformer for shape generation that ensures alignment with input conditions, and Hunyuan3D-Paint, a texture synthesis model that uses geometric and diffusion priors to produce high-quality, vibrant textures. Additionally, Hunyuan3D-Studio provides a user-friendly platform for asset creation, manipulation, and animation, catering to both professionals and amateurs. Evaluations show that Hunyuan3D 2.0 surpasses previous state-of-the-art models, both open- and closed-source, in geometry detail, condition alignment, and texture quality. The system is publicly released to advance the open-source 3D generative modeling community.

We propose Hunyuan3D-1.0, a two-stage approach available in a lite version and a standard version, both of which support text- and image-conditioned generation. In the first stage, we employ a multi-view diffusion model that efficiently generates multi-view RGB images in approximately 4 seconds. In the second stage, we introduce a feed-forward reconstruction model that rapidly and faithfully reconstructs the 3D asset from the generated multi-view images in approximately 7 seconds.